1. Installation environment

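- Three AWS EC2 t2.medium instances (1 master, 2 workers)

- Rationale: the official documentation lists a minimum of 2 CPUs and 2 GB of memory per machine, and a t2.medium provides 2 vCPUs and 4 GiB of memory.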

Reference (official documentation): Installing kubeadm (kubernetes.io)
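The minimum above can be checked on a running Linux host before anything is installed. This is a minimal sketch, assuming the 2 CPU / 2 GB figures from the documentation; `meets_minimum` and `check_this_host` are hypothetical helpers, and `kubeadm init` runs its own preflight checks regardless.

```shell
#!/usr/bin/env bash
# Sketch: compare a Linux host against the documented kubeadm minimum.
# Thresholds are assumptions taken from "2 CPUs, 2 GB RAM"; MemTotal is reported
# slightly below the installed RAM, so a machine with exactly 2 GB may fall just short.
MIN_CPUS=2
MIN_MEM_MIB=2048

# meets_minimum CPUS MEM_MIB: exit status 0 only if both minimums are met.
meets_minimum() {
  [ "$1" -ge "$MIN_CPUS" ] && [ "$2" -ge "$MIN_MEM_MIB" ]
}

# check_this_host: read nproc and /proc/meminfo, print a summary, return the result.
check_this_host() {
  local cpus mem_mib
  cpus="$(nproc)"
  mem_mib="$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)"
  if meets_minimum "$cpus" "$mem_mib"; then
    echo "ok: ${cpus} CPUs, ${mem_mib} MiB"
  else
    echo "below minimum: ${cpus} CPUs, ${mem_mib} MiB" >&2
    return 1
  fi
}
```

On a t2.medium (2 vCPUs, 4 GiB), `check_this_host` should report ok.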

2. Creating the master node

- Launch an EC2 instance

- Instance settings:

- Instance type: t2.medium

- OS: Ubuntu 20.10

- SSD: 8GB

- Name: master

- Security Group: open every port that the official documentation lists as required
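The security group rules can also be created from the command line. This is a sketch using the AWS CLI, assuming the port table from the v1.21-era kubeadm "Check required ports" section (newer docs list 10259/10257 instead of 10251/10252); the security group ID and source CIDR are placeholders. Calico has its own network requirements (for example BGP on TCP 179 and IP-in-IP traffic between nodes), which this list does not cover. Setting DRY_RUN=1 prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: open the kubeadm-required TCP ports on an EC2 security group.
# Port list: kubeadm "Check required ports" table as of v1.21 (an assumption;
# newer docs use 10259/10257 for the scheduler and controller-manager).
# SG_ID and SRC_CIDR are placeholders; keep SRC_CIDR limited to the VPC.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

control_plane_ports() { printf '%s\n' 6443 2379-2380 10250 10251 10252; }
worker_ports() { printf '%s\n' 10250 30000-32767; }

# open_ports SG_ID SRC_CIDR ROLE   (ROLE: control-plane or worker)
open_ports() {
  local sg="$1" cidr="$2" role="$3" ports port
  case "$role" in
    control-plane) ports="$(control_plane_ports)" ;;
    worker) ports="$(worker_ports)" ;;
    *) echo "unknown role: $role" >&2; return 1 ;;
  esac
  for port in $ports; do
    run aws ec2 authorize-security-group-ingress \
      --group-id "$sg" --protocol tcp --port "$port" --cidr "$cidr"
  done
}
```

If all three instances share one security group, `open_ports` would be run once per role against the same group; port 10250 appears in both lists, so AWS rejects the second, duplicate rule for it.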

- Change the hostname

- File: /etc/hostname

- Before: ip-10-0-1-228

- After: master

- Reboot the instance
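On Ubuntu the same change can be made without editing the file by hand. This is a minimal sketch using `hostnamectl` (a swapped-in approach, not the one used in the steps above); `set_node_hostname` is a hypothetical helper, and DRY_RUN=1 prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: set a node's hostname with hostnamectl (systemd) instead of editing /etc/hostname.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# set_node_hostname NAME: e.g. master, work1, work2 (hypothetical helper).
set_node_hostname() {
  run sudo hostnamectl set-hostname "$1"
  run hostnamectl status   # the "Static hostname:" line should show NAME
}
```

`hostnamectl set-hostname` changes the running hostname immediately. The name matters because kubeadm registers each node under its hostname by default.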

3. Installing a container runtime (CRI)

- Supported container runtimes:

- Docker (used here)

- containerd

- CRI-O

- Install Docker by following the official guide: Install Docker Engine on Ubuntu (docs.docker.com)

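- Installation summary:

- Remove older versions, if any: sudo apt-get remove docker docker-engine docker.io containerd runc

- sudo apt-get update

- sudo apt-get install apt-transport-https ca-certificates curl gnupg lsb-release

- curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

- Add the Docker apt repository: echo "deb [arch=amd64 signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

- sudo apt-get update

- sudo apt-get install docker-ce docker-ce-cli containerd.io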
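A quick way to confirm the installation, following the hello-world check in Docker's install guide. `verify_docker` is a hypothetical helper, and DRY_RUN=1 only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: smoke-test the Docker Engine install (hello-world check from Docker's guide).
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

verify_docker() {
  run sudo systemctl is-active docker   # prints "active" if the daemon is running
  run sudo docker run hello-world       # pulls and runs a small test image
}
```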

4. Installing kubeadm

- sudo apt-get update

- sudo apt-get install -y apt-transport-https ca-certificates curl

- sudo curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

- echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

- sudo apt-get update

- sudo apt-get install -y kubelet kubeadm kubectl

- sudo apt-mark hold kubelet kubeadm kubectl
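The apt.kubernetes.io / packages.cloud.google.com repositories used above were deprecated and frozen in 2023. Current kubeadm documentation installs from per-minor-version repositories on pkgs.k8s.io, which only carry v1.24 and later, so the v1.21 packages used in this post are not available there. This is a sketch of the equivalent setup; `K8S_MINOR` and its default are an assumed choice, and the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: the pkgs.k8s.io equivalent of the step-4 repository setup.
# K8S_MINOR is an assumed choice; pkgs.k8s.io only hosts v1.24 and later.
K8S_MINOR="${K8S_MINOR:-v1.30}"
KEYRING=/etc/apt/keyrings/kubernetes-apt-keyring.gpg

# k8s_apt_source_line MINOR: print the sources.list entry for one minor release.
k8s_apt_source_line() {
  echo "deb [signed-by=${KEYRING}] https://pkgs.k8s.io/core:/stable:/$1/deb/ /"
}

# install_k8s_tools: add the key and repository, then install and pin the tools (needs sudo).
install_k8s_tools() {
  sudo mkdir -p -m 755 /etc/apt/keyrings
  curl -fsSL "https://pkgs.k8s.io/core:/stable:/${K8S_MINOR}/deb/Release.key" \
    | sudo gpg --dearmor -o "$KEYRING"
  k8s_apt_source_line "$K8S_MINOR" | sudo tee /etc/apt/sources.list.d/kubernetes.list
  sudo apt-get update
  sudo apt-get install -y kubelet kubeadm kubectl
  sudo apt-mark hold kubelet kubeadm kubectl
}
```

Each minor release has its own repository, so moving to a newer minor version means changing `K8S_MINOR` and rewriting the sources.list entry.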

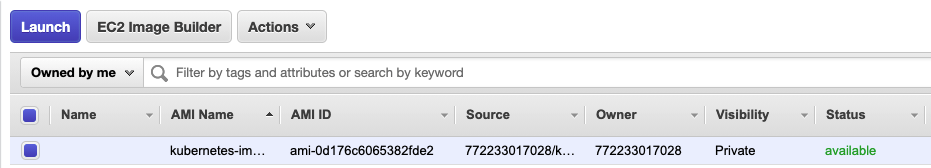

5. Creating an AMI

- Create an AMI from the EC2 instance on which kubelet, kubeadm, and kubectl are installed

- Launch the EC2 instances for work1 and work2 from that AMI
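The same two steps can be done with the AWS CLI. This is a sketch in which the instance, AMI, and security group IDs are placeholders and the helper names are hypothetical; DRY_RUN=1 prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: turn the prepared master into an AMI, then launch the workers from it.
# Instance, AMI, and security group IDs are placeholders (assumptions).
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# create_node_ami INSTANCE_ID NAME: create an image and print its ImageId.
# By default EC2 reboots the source instance while creating the image.
create_node_ami() {
  run aws ec2 create-image --instance-id "$1" --name "$2" \
    --query ImageId --output text
}

# launch_worker AMI_ID NAME SG_ID: start one t2.medium tagged with a Name.
launch_worker() {
  run aws ec2 run-instances --image-id "$1" --instance-type t2.medium --count 1 \
    --security-group-ids "$3" \
    --tag-specifications "ResourceType=instance,Tags=[{Key=Name,Value=$2}]"
}
```

`launch_worker` would be run once for work1 and once for work2. Each worker still needs its own hostname (the final node list shows work1 and work2), set the same way as in step 2. The kubeadm docs also ask that MAC addresses and product_uuid be unique per node; they can be compared across instances with `ip link` and `sudo cat /sys/class/dmi/id/product_uuid`.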

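6. Running kubeadm init

- On the master node, run kubeadm init as root.

- When it finishes, run the following on the master node as the regular user so that kubectl can reach the cluster:

```
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```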
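A sketch of the init step itself. The `--pod-network-cidr` value is an assumption, since the text does not say which flags were used: 192.168.0.0/16 is the default IP pool in the Calico manifest used in step 8 and does not overlap the 10.0.x.x VPC addresses seen here. The helper names are hypothetical, and DRY_RUN=1 only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch of the step-6 commands on the master node.
# POD_CIDR is an assumption: the text does not say which flags were used.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

POD_CIDR="${POD_CIDR:-192.168.0.0/16}"

# Initialize the control plane; the output ends with a 'kubeadm join ...' line for the workers.
init_control_plane() {
  run sudo kubeadm init --pod-network-cidr="$POD_CIDR"
}

# Print a fresh join command later on (bootstrap tokens expire after 24 hours by default).
print_join_command() {
  run sudo kubeadm token create --print-join-command
}
```

`print_join_command` is useful when the workers are added more than a day after `kubeadm init`, because the token printed by init will have expired by then.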

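7. Adding the worker nodes

- On each worker node, run the kubeadm join command printed at the end of kubeadm init (as root) to add it to the cluster. The token and CA certificate hash below are specific to this cluster:

```
kubeadm join 10.0.1.228:6443 --token nh1mww.vw7fq72f53m6jcba \
    --discovery-token-ca-cert-hash sha256:53c88cb516b751cd5a87f44ff8b4027c043953b77d02c7d7deec0640702b53c5
```

8. Cluster networking setup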

- I had already tried Weave Net, so this time I install Calico

- Calico offers several installation methods depending on the type of Kubernetes deployment

- This install follows "Install Calico networking and network policy for on-premises deployments" (docs.projectcalico.org)
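The manifest-based install from that guide comes down to downloading calico.yaml, optionally adjusting the pod IP pool, and applying it. This is a sketch; the manifest URL is the one used in the log, which may no longer be served at that address for newer Calico releases, and the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of the manifest-based Calico install (URL taken from the log; may have moved since).
MANIFEST_URL="https://docs.projectcalico.org/manifests/calico.yaml"

# show_pool_cidr FILE: print the (often commented-out) CALICO_IPV4POOL_CIDR setting and its value line.
show_pool_cidr() {
  grep -n -A1 'CALICO_IPV4POOL_CIDR' "${1:-calico.yaml}"
}

# install_calico: download the manifest, show the pool setting, and apply it with kubectl.
install_calico() {
  curl -fsSL "$MANIFEST_URL" -o calico.yaml
  show_pool_cidr calico.yaml || true
  kubectl apply -f calico.yaml
}
```

If the pool setting stays commented out, Calico falls back to 192.168.0.0/16.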

- Install Calico and check the output:


```
ubuntu@master:~$ curl https://docs.projectcalico.org/manifests/calico.yaml -O
% Total % Received % Xferd Average Speed Time Time Time Current
Dload Upload Total Spent Left Speed
100 185k 100 185k 0 0 213k 0 --:--:-- --:--:-- --:--:-- 213k
ubuntu@master:~$ kubectl apply -f calico.yaml
configmap/calico-config created
customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrole.rbac.authorization.k8s.io/calico-node created
clusterrolebinding.rbac.authorization.k8s.io/calico-node created
daemonset.apps/calico-node created
serviceaccount/calico-node created
deployment.apps/calico-kube-controllers created
serviceaccount/calico-kube-controllers created
Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget
poddisruptionbudget.policy/calico-kube-controllers created
```

9. Checking the final state

```
ubuntu@master:~$ kubectl get pod -n kube-system
NAME READY STATUS RESTARTS AGE
calico-kube-controllers-78d6f96c7b-7jkzf 1/1 Running 0 37s
calico-node-qmrml 0/1 Running 0 37s
calico-node-th24k 0/1 Running 0 37s
calico-node-w7hvr 0/1 Running 0 37s
coredns-558bd4d5db-g42l8 0/1 ContainerCreating 0 57m
coredns-558bd4d5db-gdmhx 0/1 ContainerCreating 0 57m
etcd-master 1/1 Running 0 57m
kube-apiserver-master 1/1 Running 0 57m
kube-controller-manager-master 1/1 Running 0 57m
kube-proxy-26l5j 1/1 Running 0 57m
kube-proxy-k544z 1/1 Running 0 46m
kube-proxy-s86n4 1/1 Running 0 46m
kube-scheduler-master 1/1 Running 0 57m
ubuntu@master:~$ kubectl get nodes
NAME STATUS ROLES AGE VERSION
master Ready control-plane,master 57m v1.21.1
work1 Ready <none> 47m v1.21.1
work2 Ready <none> 47m v1.21.1
```

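In the pod listing from step 9, the calico-node pods are still 0/1 and CoreDNS is still ContainerCreating only 37 seconds after Calico was applied, while all three nodes already report Ready. This is a sketch for waiting until they settle and for listing pods that are still not ready; the 300s timeout is an arbitrary choice, the helpers are hypothetical, and DRY_RUN=1 prints the kubectl commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: wait for Calico and CoreDNS to finish starting, then list pods still not ready.
# The default timeout is an arbitrary choice (assumption).
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

wait_for_cluster() {
  local timeout="${1:-300s}"
  run kubectl -n kube-system rollout status daemonset/calico-node --timeout="$timeout"
  run kubectl -n kube-system rollout status deployment/coredns --timeout="$timeout"
  run kubectl wait --for=condition=Ready nodes --all --timeout="$timeout"
}

# Read `kubectl get pod` output on stdin; print names whose READY column x/y has x != y.
not_ready_pods() {
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}
```

Usage: `wait_for_cluster`, then `kubectl get pod -n kube-system | not_ready_pods`, which prints nothing once every pod is fully ready.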